Anti-Patterns in Tech Cost Management: No ongoing reviews

Series Index

- Introduction

- Anti-Pattern 1: Not considering scale

- Anti-Pattern 2: Bad cloud strategy

- Anti-Pattern 3: Inability to assign/attribute costs

- Anti-Pattern 4: No metrics or bad metrics

- Anti-Pattern 5: Not designing it in

- Anti-Pattern 6: Cost management as a standalone

- Anti-Pattern 7/8: No ongoing reviews (current and potential)

- Anti-Pattern 9: Across the board cuts

- Anti-Pattern 10: “A tool will solve the problem!”

- Anti-Pattern Bonus: Don’t do rewards programs!

- A few thoughts: Three things you can do right now, for yourself and your team

- Wrap up

Today I’ll deal with two anti-patterns at once, in part because they are related and in part because I took the day off and missed posting yesterday.

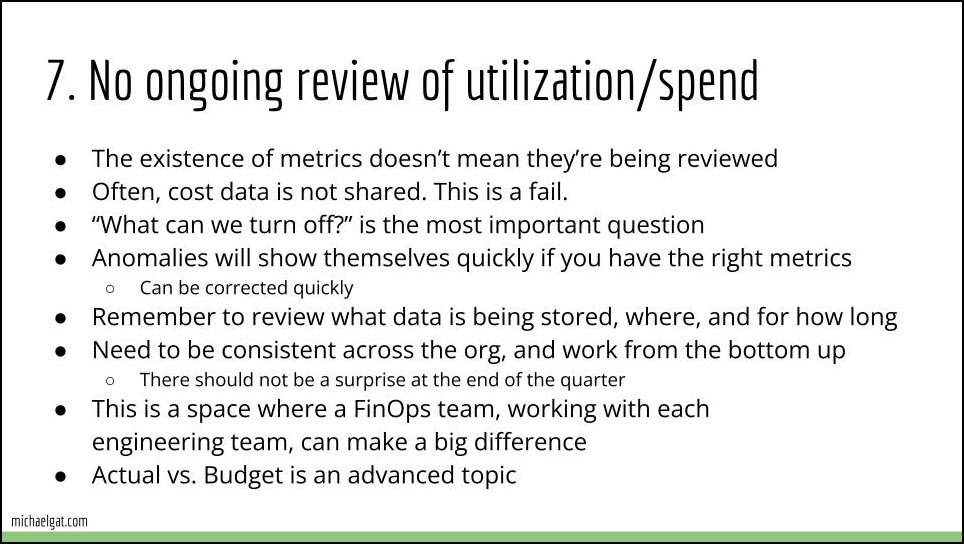

Anti-pattern 7: No ongoing review of utilization/spend

The first is a failure to review spending on an ongoing and consistent basis. All the metrics in the world don’t matter if they’re not being reviewed regularly and consistently and used to identify issues. This can be the result of a number of poor practices that I’ve observed in the field.

The first of these practices is a general reluctance to share cost data. This is a core mistake. While it may not be a good idea (or even permissible) to share details like enterprise discounts from cloud providers, it is rarely a problem to share the baseline costs of the various services consumed. These may not be completely accurate, but it is rare that they are not directionally correct. Another reason for failing to share this information is cultural: the assumption that anything involving dollars should stay in the finance department or, at most, be shared with senior managers. Engineering teams may know things like GB of data stored, or the number of vCPUs active, or stupid things like “cpu utilization,” but they don’t know the costs. This is at odds with everything else we want engineers to be able to act on, where we not only ensure that they have the data, but also that feedback loops are short and designed to be acted on quickly. Cost should be managed the same way.
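To make "directionally correct" concrete, here is a minimal sketch of turning usage numbers a team already tracks into approximate dollars. The unit prices, the `UNIT_PRICES` table, and the `estimate_monthly_cost` helper are all assumptions for illustration; substitute your provider's published list prices.

```python
# Hypothetical list prices in USD. These are placeholders, not real quotes;
# replace them with the published baseline rates for the services you use.
UNIT_PRICES = {
    "storage_gb_month": 0.023,  # assumed price per GB-month of object storage
    "vcpu_hour": 0.04,          # assumed price per vCPU-hour of compute
}

HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


def estimate_monthly_cost(storage_gb: float, vcpus: int) -> dict:
    """Translate usage figures into an approximate monthly cost in USD."""
    storage = storage_gb * UNIT_PRICES["storage_gb_month"]
    compute = vcpus * HOURS_PER_MONTH * UNIT_PRICES["vcpu_hour"]
    return {
        "storage_usd": round(storage, 2),
        "compute_usd": round(compute, 2),
        "total_usd": round(storage + compute, 2),
    }


if __name__ == "__main__":
    # A team that knows it stores 1,000 GB and runs 10 vCPUs around the clock
    print(estimate_monthly_cost(storage_gb=1000, vcpus=10))
```

The numbers will be off by whatever discounts apply, but they are usually good enough for a team to see which of its resources dominate the bill.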

Beyond the “informal” review that should happen at the team level on an ongoing basis, there need to be regular, formal and consistent reviews, which will usually be run by somebody other than the engineering team. (This is where a FinOps org, or at least a finance specialist, helps a lot.)

The reviews need to be:

- Regular: most companies I have talked to do this monthly, but often there is also a quarterly cadence for more senior management. How frequent this needs to be depends a lot on the nature of the business, and primarily on how much changes and how quickly. A large monolith will probably need less frequent review than 100 microservices, all of which change daily.

- Formal: There should be a process, and everybody should know what is being examined and why.

- Consistent: Lack of consistency is one of the things I’ve observed in organizations that don’t have any kind of formal cost management in place. Reviews may take place, and may even take place regularly, but they vary across the org, and this makes benchmarking very difficult.
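One way to keep a recurring review consistent is to run the same mechanical check every period. The sketch below compares per-service spend between two periods and flags anything that moved by more than a threshold. The `flag_spend_changes` function, the 20% default, and the sample figures are hypothetical, not a standard.

```python
def flag_spend_changes(previous: dict, current: dict, threshold: float = 0.20) -> list:
    """Return (service, previous_usd, current_usd) tuples that deserve a look.

    A service is flagged when its spend changed by more than `threshold`
    (as a fraction of the previous period), or when it is new this period.
    """
    flagged = []
    for service in sorted(set(previous) | set(current)):
        before = previous.get(service, 0.0)
        after = current.get(service, 0.0)
        if before == 0.0:
            # No prior spend: a new service is flagged if it costs anything
            if after > 0.0:
                flagged.append((service, before, after))
            continue
        change = (after - before) / before
        if abs(change) > threshold:
            flagged.append((service, before, after))
    return flagged


if __name__ == "__main__":
    # Made-up monthly spend per service, in USD
    last_month = {"api": 1000.0, "batch": 500.0, "search": 200.0}
    this_month = {"api": 1050.0, "batch": 800.0, "ml": 300.0}
    for service, before, after in flag_spend_changes(last_month, this_month):
        print(f"{service}: {before:.2f} -> {after:.2f}")
```

Because every team is measured with the same rule, the output can be compared across the org, which is the point of consistency.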

A key item to include in every review, both the informal ongoing ones inside teams and the more formal reviews, is explaining what is being used and why, and understanding what can be turned off or scaled back. This is where consistency matters, because it will reveal all the things that are costing money but which nobody seems to own. Even in high-performing organizations, I’ve often encountered unused resources that have been left hanging and forgotten. Often these aren’t even identified until something like a security review asks why this old non-compliant thing is still out there.

We should, in fact, try to approach cost reviews in the same way that security teams approach their regular reviews. The effort should be to identify everything, not just “everything a team is thinking about today.”
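A sketch of what "identify everything" might look like in practice: walk the full inventory rather than a team's own list, and separate out resources with no owner and resources that have an owner but have not been used recently. The record shape (`id`, `tags`, `last_used`), the `owner` tag name, and the 30-day cutoff are assumptions; a real inventory would come from your provider's export or asset database.

```python
from datetime import date


def find_review_candidates(resources: list, today: date, idle_days: int = 30):
    """Split an inventory into unowned resource IDs and owned-but-idle IDs."""
    unowned, idle = [], []
    for resource in resources:
        owner = resource.get("tags", {}).get("owner")
        if not owner:
            # Nobody claims it: the first question is who pays for it and why
            unowned.append(resource["id"])
        elif (today - resource["last_used"]).days > idle_days:
            # Owned, but untouched for longer than the cutoff
            idle.append(resource["id"])
    return unowned, idle


if __name__ == "__main__":
    # Hypothetical inventory records
    inventory = [
        {"id": "vm-1", "tags": {"owner": "payments"}, "last_used": date(2024, 2, 28)},
        {"id": "vm-2", "tags": {}, "last_used": date(2023, 6, 1)},
        {"id": "disk-7", "tags": {"owner": "search"}, "last_used": date(2023, 11, 15)},
    ]
    unowned, idle = find_review_candidates(inventory, today=date(2024, 3, 1))
    print("unowned:", unowned)
    print("idle:", idle)
```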

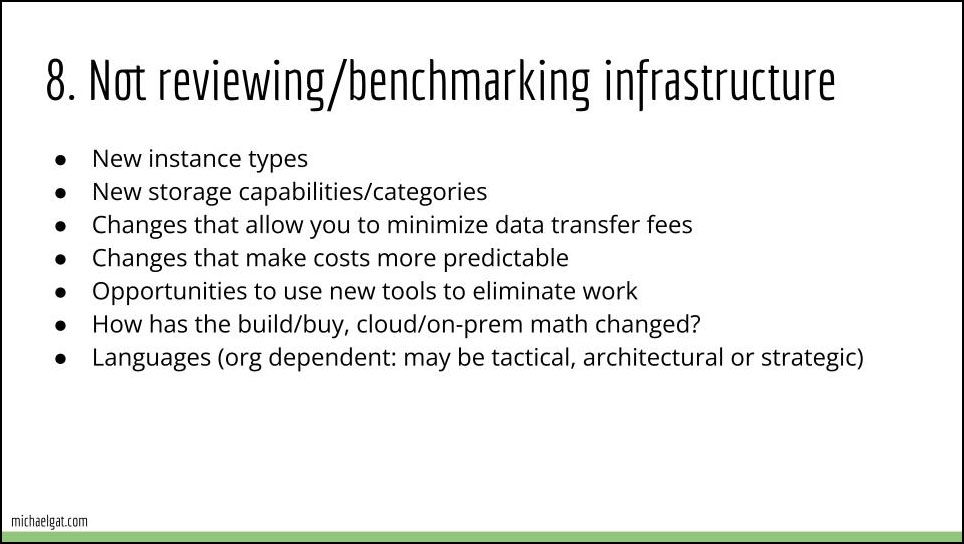

Anti-pattern 8: No regular review/benchmarking of infrastructure and tools

The second type of review is of opportunities for improvement/re-architecture. I’ve broken this off from the regular cost reviews for two reasons:

- These reviews tend to be more architecturally focused, and happen within engineering teams, not with management. (Though FinOps may be involved.)

- These reviews are less formally structured and consistent across teams, as each will focus on the capabilities that the team needs or could use.

The point of this kind of review is to ensure that we are keeping up with the latest capabilities and employing them in our service infrastructure where they make sense. This is true whether you are dealing with cloud or on-prem infrastructure.

Two years after its release, I still regularly see organizations that are not using S3 Intelligent-Tiering, even though by this point using it as the default has become something of a best practice. I routinely encounter organizations that are using ancient and costly compute instance families, and I see this across cloud providers.

I also see the opposite: a rush to adopt new instance types or capabilities without benchmarking how they compare to previous ones from a cost perspective. (Historically, this price/performance has only moved in one direction, but recently that’s begun to change.) Most organizations benchmark for performance and assume that cost will follow. That is beginning to look like a mistake.
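Benchmarking for cost as well as performance can be as simple as dividing price by measured throughput. The sketch below computes cost per million requests for candidate instance types and ranks them. The instance names, hourly prices, and throughput figures are invented for illustration; the throughput should come from your own load tests.

```python
def cost_per_million_requests(hourly_price_usd: float, requests_per_second: float) -> float:
    """Cost in USD to serve one million requests at the measured throughput."""
    requests_per_hour = requests_per_second * 3600
    return hourly_price_usd / requests_per_hour * 1_000_000


def rank_by_cost(candidates: dict) -> list:
    """Return (name, cost_per_million) pairs, cheapest first.

    `candidates` maps a name to (hourly_price_usd, requests_per_second).
    """
    costs = [
        (name, cost_per_million_requests(price, rps))
        for name, (price, rps) in candidates.items()
    ]
    return sorted(costs, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical figures: the newer type is 20% faster but 50% more expensive
    candidates = {
        "old-gen": (0.40, 1000.0),
        "new-gen": (0.60, 1200.0),
    }
    for name, cost in rank_by_cost(candidates):
        print(f"{name}: ${cost:.4f} per million requests")
```

With these made-up numbers the faster instance wins the performance benchmark and still loses on cost per request, which is exactly the case a performance-only benchmark hides.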

And of course, there are always new tools that may make sense (or may cost a lot of money and deliver nothing). The calculus of build/buy may change drastically. Even the choice of cloud vs. on-prem can change over time and should be reconsidered from time to time.

Key Takeaway

No choices are forever. Every optimization degrades. Regular review of choices and utilization needs to happen both within and across teams.

Join us at the SoCal Linux Expo (SCaLE 21x) in Pasadena on March 14-17. My talk will be on Saturday the 16th. I will also be speaking at UpSCaLE on Friday night, and running the Observability track on Saturday and Sunday.

It’s $90 for four days of great content. (If you know me, ping me as I may have a few discount passes left.)