Moving WordPress (1) – what I wanted to do

This is the first part of my writeup of everything I did to move my site from one EC2 instance to another, and make a bunch of other changes. It’s mostly documentation for myself, so it is rough and may at times not make complete sense, though I hope anybody else who tries something similar finds it useful:

Moving WordPress (1) – what I wanted to do

Moving WordPress (2) – AWS setup

Moving WordPress (3) – database and content

Moving WordPress (4) – SSL, themes, etc.

I finally moved this site, along with the other two sites hosted in the same place. It was educational, frustrating, but ultimately successful. This multi-part writeup is in part for my own records, in part for anybody else who may be attempting the same kind of thing, and in part to document some of the general AWS-related lessons I learned or re-learned as I dove head-first into several days of clickops.

I’ll occasionally get fairly detailed here. When I was looking for online instructions on how to do such a migration I found none, and had to piece it together from lots of sources. My hope is that if anybody is trying to do this, they’ll find some ideas here. If I need to do this at some point in the future, I’ll find a starting point. I won’t chronicle every experiment and dead-end, but will point it out if I had to try something more than once before I got it right, just so others can hopefully avoid some of the same mistakes.

I should start by saying that a lot of what I’ve done is absolutely not the way you should do things professionally. You should automate your infrastructure. Period. If you manage anything significant with clickops, you are entering a world of pain. I haven’t done much hands-on AWS management in years. For most of the past five years, I worked for AWS and we all were working multiple layers of abstraction below the public services that everybody knows. So I was ready for a few days of “fucking around and finding out.” I found out a lot.

Where I started

My original WordPress setup was fairly simple and mostly followed the old docs that AWS provided for doing this on previous versions of Amazon Linux. I had a single EC2 instance (t2.small), with the LAMP stack installed. There was a single EBS volume that contained everything: the OS, the rest of the LAMP stack, the WordPress PHP application, and the various content (mostly photos) that I had uploaded. It all lived in a VPC with a single subnet. I had periodically updated both the WordPress application and the underlying stack, but due to conflicts with some of the bits of WordPress, I had been stalled on PHP 7.4, which is long-deprecated. Attempts to upgrade to 8.0 had previously failed for reasons I had never bothered investigating in detail.

My aging MariaDB server (which I used in place of MySQL in the LAMP stack) had also not been updated in some time, though there didn’t seem to be any issues with the data.

What I wanted to do

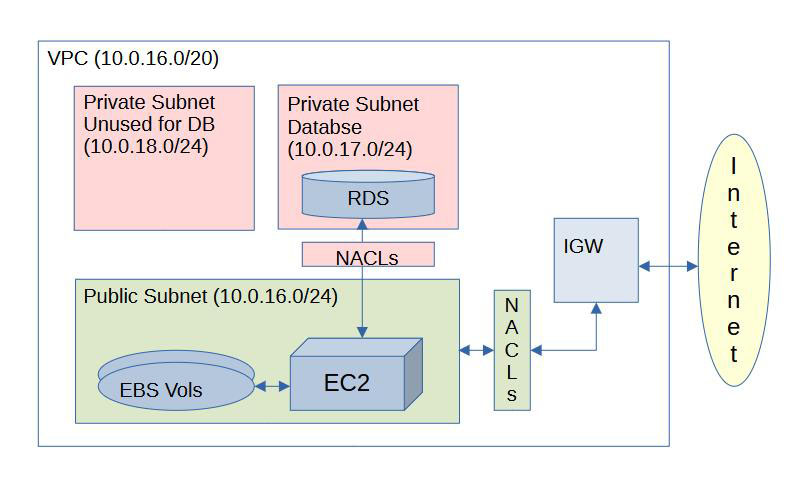

I wanted a more robust, though not really scalable, environment with distinct database and web servers, in appropriately-secured subnets within a single VPC. I wanted to move the database to a managed AWS RDS instance rather than rolling my own MariaDB and taking on the responsibility for maintaining it. While I was not looking for complex and expensive multi-AZ setups, and won’t assume any need for horizontal scaling, I did want a VPC environment that was robust enough that I could add other capabilities over time. I’ve already been thinking about writing some Lambda functions to allow me to automatically resize and watermark images when I upload them to S3, and then make them available to the WordPress application. That will be something for future updates.

VPC/Network

I’ll have a single VPC with two subnets that are in use and one more that RDS requires but that will not be used. The public subnet (10.0.16.0/24) will contain the web server, with two connected EBS volumes. The private database subnet (10.0.17.0/24) will contain a single RDS instance. The unused private alt database subnet (10.0.18.0/24) is required when you set up an RDS instance. I presume AWS requires this to avoid a situation where you want to go multi-AZ later and can’t, because changing the subnet association of an RDS instance is not possible. I had forgotten this, or quite possibly never noticed, because I don’t think I have ever set up a single-AZ database before. This last subnet will have a Network Access Control List (NACL) that blocks all traffic in and out and will be effectively unavailable for anything.
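As a quick sanity check of that layout, here is a minimal Python sketch using only the standard library. It verifies that the three subnets sit inside the VPC range, do not overlap, and shows how many addresses each one leaves after the five that AWS reserves per subnet. The VPC CIDR (10.0.0.0/16) and the subnet names are assumptions for illustration; the post only gives the three subnet ranges.

```python
import ipaddress

# Assumption: the VPC CIDR is not stated in the post. 10.0.0.0/16 is a
# hypothetical value chosen only because it contains all three subnets.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# Subnet ranges as given in the text; the names are illustrative.
SUBNETS = {
    "public-web": ipaddress.ip_network("10.0.16.0/24"),
    "private-db": ipaddress.ip_network("10.0.17.0/24"),
    "private-db-alt": ipaddress.ip_network("10.0.18.0/24"),
}

# AWS reserves five addresses in every subnet (the first four and the last).
AWS_RESERVED_PER_SUBNET = 5


def check_plan(vpc, subnets):
    """Return a list of problems found in the subnet plan (empty if none)."""
    problems = []
    items = list(subnets.items())
    # Every subnet must fall inside the VPC range.
    for name, net in items:
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside the VPC range {vpc}")
    # No two subnets may share addresses.
    for i, (name_a, net_a) in enumerate(items):
        for name_b, net_b in items[i + 1:]:
            if net_a.overlaps(net_b):
                problems.append(f"{name_a} overlaps {name_b}")
    return problems


def usable_addresses(net):
    """Addresses left for resources after the AWS-reserved ones."""
    return net.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    for problem in check_plan(VPC_CIDR, SUBNETS):
        print("PROBLEM:", problem)
    for name, net in SUBNETS.items():
        print(f"{name}: {net} leaves {usable_addresses(net)} usable addresses")
```

Running a check like this before creating anything in the console is cheap, and the same dictionary could later feed whatever automation replaces the clickops.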

Web Server

There will be an EC2 instance appropriately sized for use by these sites only. I’ve chosen a t4g.micro, which uses AWS’s Graviton architecture in place of the Intel architecture I had previously used. I’ve been slowly moving a lot of my work to ARM, and it’s cheaper for the capabilities. The original server was bigger (t3.small) as it hosted the application, data and the database and was probably still overkill. I also used it at times for random other experiments. I won’t do that again, as the database is going to be separate, image storage will likely move to S3 at some point, and most other workloads I run have moved to serverless. Putting a lot of different things, even just the application and database server, on the same instance makes troubleshooting a pain. Adding random other workloads compounds this.

I will be more careful about organizing my data, both for maintenance and backup purposes. I’ll have two EBS volumes. One will be the root volume (mounted at /), containing all the normal Linux stuff, applications, etc. The second volume will be mounted to /html and will contain everything for the three websites I’m running and any others in the future. In a more perfect world I’d have one volume per website, but the size of these sites is pretty tiny. If I ever need to move one of them, I can copy the whole volume, then keep the parts I need.

Given the infrequency of changes, and the relatively easy re-creation (or re-uploading) of data, I’ll be content with snapshots a couple of times a week for my limited but cheap backup strategy. (Don’t try this at home! Or do. But definitely don’t try this at work.)

Database

The RDS instance is a db.t4g.micro. Again, I went for the Graviton/ARM option, running in the same AZ as my web server. I chose to give it 20GB of storage, which is an order of magnitude more than the original database used after cleanup. It can autoscale up to 60GB. I can dream of ever needing that much. The WordPress database mostly contains text and metadata, so it’s never going to be very large. Again, I’ll use snapshots as a cheap but limited backup strategy.
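For anyone who would rather script this than click through the console, the choices above map onto a handful of parameters. The sketch below only builds the parameter dictionary, so it runs without AWS credentials or boto3 installed. The keys are the ones I understand boto3's RDS `create_db_instance` call to accept; the function name, the identifier, the engine value, the subnet group name and the AZ are all hypothetical placeholders, since the post does not state them. This is not how the instance in this writeup was created.

```python
def rds_instance_params(identifier, engine, subnet_group, availability_zone):
    """Build keyword arguments describing the database chosen in the text.

    All four arguments are placeholders supplied by the caller; none of
    their values appear in the original post.
    """
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": engine,                      # assumption: engine is not stated in the post
        "DBInstanceClass": "db.t4g.micro",     # Graviton/ARM instance class from the text
        "AllocatedStorage": 20,                # initial storage in GB
        "MaxAllocatedStorage": 60,             # storage autoscaling ceiling in GB
        "MultiAZ": False,                      # single-AZ by design
        "AvailabilityZone": availability_zone, # same AZ as the web server
        "DBSubnetGroupName": subnet_group,     # group covering both database subnets
        "PubliclyAccessible": False,           # assumption: database stays in the private subnet
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    params = rds_instance_params(
        identifier="wordpress-db",
        engine="mariadb",
        subnet_group="wordpress-db-subnets",
        availability_zone="us-east-1a",
    )
    for key, value in params.items():
        print(f"{key} = {value}")
    # With boto3 installed and credentials configured, these would be passed
    # along with master user credentials, roughly as:
    #   boto3.client("rds").create_db_instance(**params, MasterUsername=..., MasterUserPassword=...)
```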

Other things

I want access only via https, so I’ll need to set up certificate management, rewriting http to https, and a few other bits to make it work. Certbot addresses these.

How to get there

To get from where I was to where I wanted to be, I’d have to:

- Switch AZs. The EC2 and RDS instance types I wanted were not available in the AZ I was originally running in. This would mean some cross-AZ transfer charges but not much, given the amount of data I’d be moving.

- Set up VPC, networking and network security in the new AZ. My original VPC was small (/24) with only a single subnet consuming all IP addresses. Even if the AZ issues had not come up, reusing it would not be possible.

- Set up EC2 instance with LAP stack (LAMP minus the MySQL piece).

- Set up RDS instance.

- Move the database(s) from my self-managed and out-of-support MariaDB application into the new RDS instance. To simplify everything and minimize costs, I decided to clean up the database first.

- Move the WordPress application files and all uploaded content including themes and WordPress plugins to the new subdirectories on the new server. Again, this would be cross-AZ, but otherwise not complex. Or so I thought.

- Set up TLS termination, certificate management, and https forwarding on the new host. This requires setting up Certbot, and making changes to the Apache config files.

- Future ideas:

  - Move image storage from EBS to S3. This wasn’t an option when I set the site up, but there are now WordPress plugins to support this.

  - Create automatic image sizing and watermarking upon upload to S3. This will involve adventures in Lambda and image management in Python, neither of which I’ve done for a while, so it should be fun.

  - Front the whole thing with an Application Load Balancer (ALB) in a new public subnet. It could then handle certificate management in place of Certbot, and allow me to make my web server subnet private. This is wildly overkill, but could be fun to play with. Besides, I used to work for AWS load balancing and it would be nice to finally use one of my old team’s products for something.

Once you move all the images to S3, you can put them behind CloudFront for even more overkill. No, no, no! Now you’re being stupid. This isn’t Twitter for Pets.
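To make the point from the list about the old VPC concrete: a /24 VPC whose only subnet is also a /24 has no address space left to carve new subnets from. A minimal sketch with Python's standard `ipaddress` module shows this. The addresses are hypothetical, because the post only gives the prefix length of the original VPC, and `free_blocks` is an illustrative helper name.

```python
import ipaddress


def free_blocks(vpc, used_subnet):
    """Return the CIDR blocks of vpc that used_subnet does not cover."""
    return sorted(vpc.address_exclude(used_subnet))


if __name__ == "__main__":
    # Hypothetical old layout: one /24 subnet filling a /24 VPC.
    old_vpc = ipaddress.ip_network("10.0.0.0/24")
    old_subnet = ipaddress.ip_network("10.0.0.0/24")
    print("old VPC free blocks:", free_blocks(old_vpc, old_subnet))

    # Hypothetical larger VPC with one /24 in use, for comparison.
    new_vpc = ipaddress.ip_network("10.0.0.0/16")
    new_subnet = ipaddress.ip_network("10.0.16.0/24")
    for block in free_blocks(new_vpc, new_subnet):
        print("new VPC free block:", block)
```

The first call returns an empty list, while the larger range still has plenty of blocks left over for database subnets or a future load balancer subnet.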

In Part 2, I’ll start with the VPC setup.