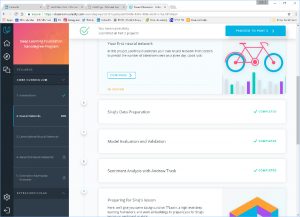

Udacity Deep Learning Nanodegree – Part I

As I mentioned yesterday, I’m about three weeks in to the Udacity Deep Learning Nanodegree program. I’ve just resubmitted my first project, and thought this would be a good time to pass on my thoughts to anybody who is following the #SoDS17 items.

For starters, I’ll be honest. There is little here that I could not learn on my own. But I find that it’s useful to learn along with others, and the structure that programs like this provide can be useful, so long as it isn’t too expensive. There is also some (limited) professional benefit to being able to say that you went through a program that is at least somewhat graded as opposed to telling others what you did and asking them to look at your github account for evidence. For myself, the structure and ability to discuss issues and problems with others were the key things that made this summer’s effort worth the $600.

Who is Siraj Raval?

Honestly, I have no idea who he is. I’m not much of a fan of videos in general, so I seemed to have missed the fact that there is apparently a de-facto YouTube Data Science video star out there. In any case, it doesn’t much matter as it seems that he lent (or probably, rented) his name to Udacity. Some of his videos are included in the course, but otherwise he doesn’t really have much to do with it. It seems that there are some occasional online sessions with him, but if you’re thinking you and a few hundred other people are going to be interacting with him regularly, you’ll be disappointed. It doesn’t matter much to me, because while I find his videos mildly entertaining, I don’t find them to be all that informative. Your thoughts on this may vary depending on your own learning preferences.

The rest of the instructional material seems well put together, nicely organized and very informative. There are a number of instructors and they go back and forth. As with any such situation, some of them resonate better with me than others. Some videos are really well done, and some are much harder to digest — sometimes just a person talking over a screen full of code showing examples. There are usually code samples to go along with the video lectures and presentations.

It’s quite well organized, with each lesson leading you to the next, and exercises mixed in. Some of the exercises are online in the Udacity site and are evaluated immediately upon completion. I have found some of these to be a bit tedious, if for no other reason than the checking system seems to do little more than compare your code to the desired code snippet, and even minor tweaks like changing variable names to make more sense to me can throw the system off. So it’s imperfect, but gets the job done, and if I want to explore further I have to take it offline into either a Jupyter notebook or the Editor/IDE of my choice. (Currently Spyder.)

First two weeks

Week one was mostly introductory with not much real work. I probably got it easier than many because there’s a lot of setup that I had already done: the Anaconda environment that includes Python, git/github, TensorFlow and a handful of other things. Other than TensorFlow I’ve been using all these tools regularly and could mostly skip through. I was also quite comfortable with the math and stats that they covered briefly. Instead, I jumped right into the fun which was running some demonstration programs using the Tensorflow environment. One of these made it possible to overlay a “style” from some existing piece of art onto a photo. So, for example, I could remake my cats’ photo in the style of Munch’s “The Scream,” or as a Japanese woodcarving, or any other number of things. The learned “styles” were given, so we didn’t go through the exercise of writing code to create our own, just applying them, but the potential is obvious.

There were a couple of other applications we got to play around with, like a traffic/self-driving vehicle simulator that we could train, and a game playing simulation. For the first week though, it was all observing and using rather than building, which was a fine introduction to the capabilities.

By week two, things heated up a bit, with the first project due at the end of the week. Udacity weeks run Wednesday to Wednesday, so weekends when many people are doing the bulk of their work come “midweek” in each lesson. It seems to work well. We jumped into neural networks with a refresher on vectors and matrix math. It was good, but very basic and I’d strongly recommend that anybody who wants to take this course should first go through the Khan Academy Linear Algebra class. It’s much more informative and much better produced than the short intro here. I took the time to go through it a couple of years ago and don’t regret the time it took. The first project is also introduced early in the week. It requires building a simple neural network to predict bike-share usage based on an existing dataset. Much of the functionality is similar to code built up in other exercises during the week.

For the project, NumPy is used to build a very simple (three layer) neural network similar to one that is presented during a series of lectures by instructor Andrew Trask. When that is done, we explored more complex topics, got an introduction to TensorFlow and built a simple deep learning library called MiniFlow. Along the way, there were a lectures from Siraj about sentiment analysis, data preparation and neural networks. All these are available free on his YouTube page as well.

Project 1

I find it helpful to build things from scratch as a method of learning. For example, I will probably never need to write a sort algorithm in my life; why would I when there are so many great sort packages out there for every programming language in existence? But the fact that I was forced to learn the various sorting mechanisms, develop the data structures required to implement them and the programs to run them, means that I will always understand sorting at a very fundamental level. That makes me a much better user of those pre-built sort packages as I have at least some clue of how they work inside. To this day, when I learn a new language, I’ll sit down and write a b-tree for no reason other than I find that if I can do that reasonably well, I probably understand the language well enough to move forward. If you can write a b-tree, you know what’s going on.

With that in mind, I was quite happy that the first project, and a significant piece of the week, was writing simple neural nets from scratch. Much of the “wrapper” code is provided, either in the online work environment or in a Jupyter notebook, but you have to understand the core functionality you’re implementing, the data structures and the operations in order to make it all work. I find this far, far better than the more common approach employed by many bootcamps, which offers a brief theoretical lecture, then moves to a nicely packaged library that does things you really don’t understand. The bike-share exercise was reasonably interesting, and left me with a few questions and some things I want to try in order to get a better result. I got dinged a bit on my initial submission for not trying to run my network with many thousands more iterations, so I made a change, resubmitted it and got a prompt response that I met all the requirements. From what little I’ve seen so far, feedback is prompt and helpful.

Other stuff

The Slack channel, with hundreds of people participating, is a bit overwhelming, but I’ve slowly gotten used to skimming through for items that are either of particular interest or for which I may have some feedback to offer. There are also discussion forums on the Udacity site for the individual projects and a handful of other topics. I think the use of Slack is relatively new and Udacity may still be trying to figure out what should go in Slack vs. the web forums, but so far the available information is good.

More to come next week.

Hope you’ve also enjoyed my photos!