Whither the mainframe

I’m going to record the ChangeLog Ship It! podcast tomorrow, hoping to give a bit of historical flavor to how we used to do things, focusing on my earliest years. I expect that some of this will relate to mainframes, which I touched for a few years early in my career, though by that point, I mostly used mainframes in the context of “how do we do things with PCs that we can’t do with mainframes, and have it all work together?”

I thought I’d write up a bit on one of the least understood bits of tech that I used in my earliest years.

The context

Mainframes are a highly opinionated solution to processing large quantities of sequential data reliably with high availability and at low cost per transaction. The architecture allows the central processing unit to focus on the core work, and outsource everything else to controllers, subcontrollers, and a variety of attached systems. You give up a lot to get this. User interfaces (the old “green screen”) are rudimentary because the CPU deals with a limited number of data elements at a time, processed when you hit the “enter” key. On mainframes “enter” and “return” are different keys. The former results in action by the CPU and the latter only involves the monitor and its controller. The only thing the CPU is concerned with are the pre-defined data fields it sends down to the display controller, and the ones it gets back.

As with most things in tech, the “opinions” embedded in the architecture reflect the technical realities of the time. There were no cheap microprocessors, memory or storage. IBM (and the other computer companies that existed at the time) leased a lot of the hardware they made, and billed by the CPU cycle, so offloading as much as possible from the CPU and billing around “real work done” made the arrangement more palatable. The size of components, the amount of power and cooling required, and the scale of the physical infrastructure needed to house them all made “all in one” unthinkable.

The PC emerged as an opposed way of thinking. It reflected microprocessor technology that didn’t exist until two decades after IBM introduced it’s first computer, the existence of cheap RAM, and relatively commodified secondary storage. Read the early writings and it’s clear that it also reflected a different overall philosophy: the individuality of the 1960/70s rather than the centralized corporate and social controls of the 1940/50s. As such, it is not only architecturally different, it represents a different opinionated worldview.

The PC assumed that you could have everything you need on your desktop to perform individual tasks and need nothing that was owned or controlled centrally. Opinions like Robert Stallman’s are only a slightly extreme version of what early PC visionaries thought computing should be: individual, and controlled entirely by the user. The ability to eventually link PCs together and connect them to centralized PC-based servers, or clusters was layered on later. Today, we are able to put together mainframe-level processing power by networking PCs together, and splitting up the work horizontally.

A key difference is scaling. Mainframes start at large scale — the entry point is several millions of dollars — and scale vertically. Internally, there’s a lot of parallelism in today’s mainframes. A Z16 mainframe can have up to 240 CPUs, in “drawers” of 4 CPUs. These run in independent partitions, and are even hot-swappable. But to a developer/operator they can be configured to appear as a single massive, superfast CPU. (You can also multi-thread if you need or want to, though it was not an original capability.)

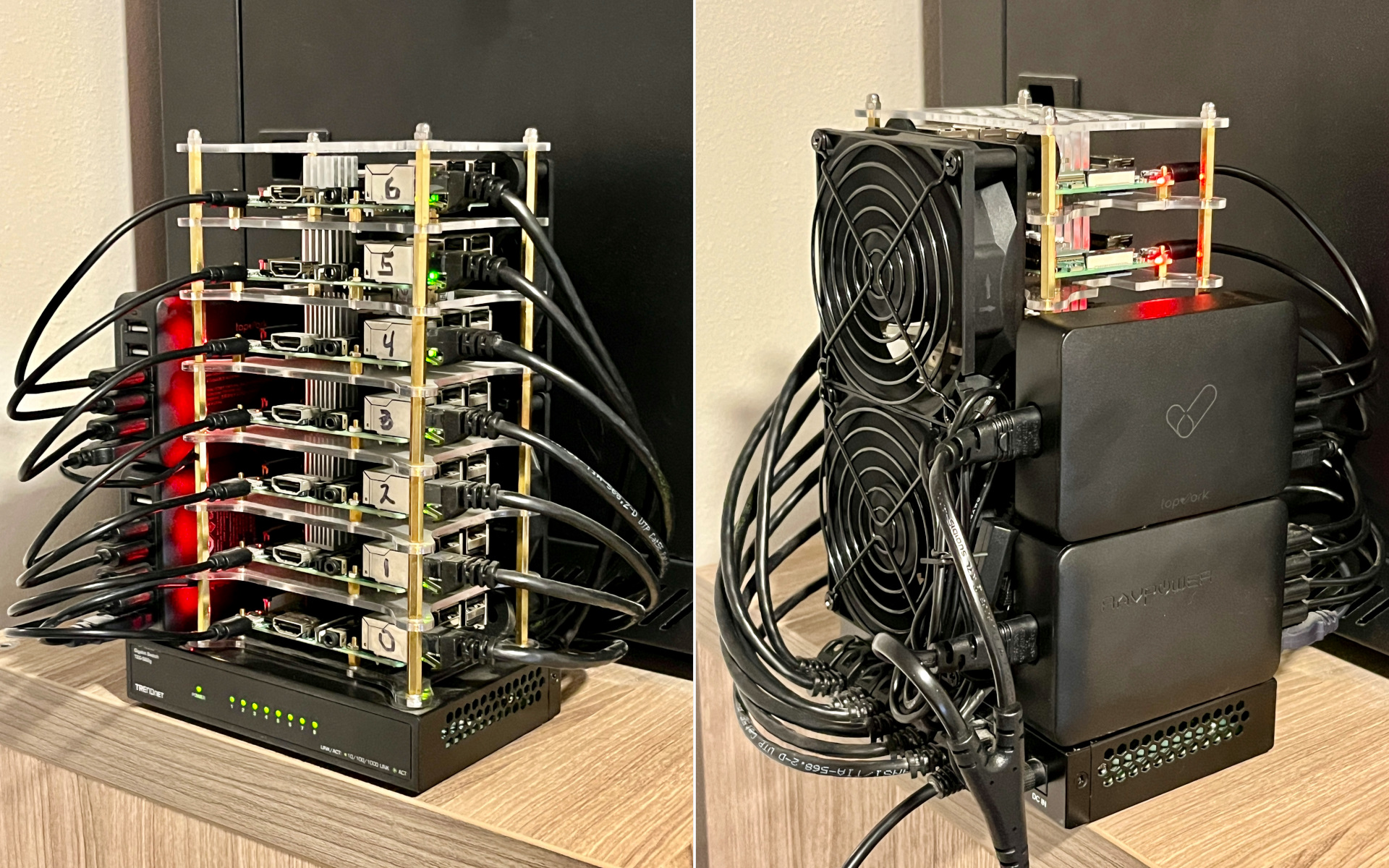

Servers that we’re all familiar with today, whether “PC based” (aka “x86”) or anything else, start small — they can be as small as a Raspberry Pi — and achieving mainframe-level power requires horizontal scaling. Obviously, if you’re running a cluster of Raspberry Pi 3s, as I am, you should probably consider some vertical scale-up before you scale out! But the power of these modern architectures is the ability to scale horizontally across dozens or hundreds of instances. Software written for this environment takes advantage of parallelism, avoiding a single bottleneck

The impact

This makes for some interesting differences, and has strongly influenced how we engineer our code to use the design opinions that are embedded in the architecture. It’s also influenced who uses them, and why fewer and fewer companies employ mainframes, even when on the surface they might appear to make financial sense.

Mainframe applications have historically been written procedurally, to process data sequentially. This is important in many situations, particularly those where the outcome of one transaction may impact the next one. (For example, the results of “process big deposit before big payment” may be very different from “process big payment before the deposit,” to use a really over-simplistic situation.) This need for maintaining sequence can be handled in clusters, but requires the software engineer to explicitly address it. IBM mainframes do this natively (they also do a bunch of other things, like encryption, natively)

This is part of the reason moving off a mainframe is so hard. The languages are not hard to understand, though often the decades of complex requirements can be difficult to untangle. But you can’t just port the code, because the highly-opinionated architectures they run on are so different. Somewhere in the middle of my career I spent a few years moving mainframe systems to more modern architectures. Taking monolithic procedural code and figuring out how to turn it into something that can be parallelized across multiple hosts — having to hold two diametrically-opposed, highly-opinionated ways of thinking in my head at the same time — is one of the most difficult things I’ve ever had to do. It almost always made sense to either figure out a way to keep it going, toss it all and rewrite completely for the new environment, or find some commercial package that was “good enough” to susbstitute for what you had. In my opinion, much of the early success of SAP, Peoplesoft (RIP), Siebel (RIP), Oracle, and other business software companies was their ability to be a “good enough” replacement for in-house mainframe applications that were too complex, or too poorly-understood to port.

Mainframes are insanely backward-compatible

Working at AWS, I got used to thinking hard about backward compatibility and not breaking the customer experience. Linus Torvalds holds the same view. That’s not new. In the mainframe world, IBM takes that approach to extreme lengths. If you’re able to find code written and compiled on a mainframe 50 years ago, you can run the same binary today with no modification and get identical results, despite 50 years of evolution to the underlying hardware and operating system.

I can’t think of any other architecture that is so consistent. One of the interesting problems in AWS’s billing systems is that they process quadrillions of small billing events per month every one of which may require multiple floating-point operations before being aggregated into a total. Changes to the floating point handling in different processor architectures and languages mean that differences can creep in. Usually this isn’t big enough to be material but when you’re dealing with billions of calculations a day, it can be. Even when it’s not it makes testing, validation and auditing a challenge. On a mainframe the results will be the same. IBM made, and has stuck to, a lot of very opinionated choices including support for fixed-point math, that make transitions away from it difficult.

Why we won’t see many (any?) new mainframe users

Legacy code makes it hard to move off a mainframe, but makes it even harder to move the other way. Absent an existential threat, you don’t walk away from a decade of code and systems that work, to try something completely different with huge startup costs.

I spent about 6 months working at Stripe during my hiatus from AWS. I was impressed with their tech. Stripe started out as a payments processor for businesses that couldn’t afford the complexity of integrating traditional payment systems. To support this, they were cloud-native, running exclusively on AWS. They were more expensive than other payment processors on a per-payment basis, but the ease of setup and maintenance over time, made them the choice for everybody who didn’t have a large and technically adept team to handle payments integration. Only later did Stripe have to compete with large banks whose core payments platforms are running on mainframes.

I was the first of several people in a row that Stripe hired to try to address the cost problem, but I quickly ran into the “Corey Quinn Rule” that states “cost is architecture.” I encountered the same at AWS billing, and the quadrillions of events per month that go into calculating monthly bills. This is the kind of thing that mainframes excel at but can be a struggle on other tech.

Both Stripe and AWS billing are (to the best of my knowledge some time later) working on re-architecture programs to address both cost and throughput, but they are evolutionary and are certainly not going to move away from their core infrastructural/platform choices. Throwing away everything to go all-in on a costly and arguably outdated architecture is not going to happen.

At the other end of the spectrum, startup cost are high enough that it will rarely make sense to start a new business on a mainframe. Cameron Seay notes in his Changelog interview, that the entry point is over $2m before you include any software. You’re not going to do that as a startup with uncertain growth or even survival prospects.

So, if you’re not going to start a business with a mainframe, and it’s difficult to switch tech once you’re established, the number of customers isn’t likely to grow. It also means that until something else comes along, the companies that already have them and are big enough to justify them, may have an advantage in terms of cost per transaction, which could further entrench monopolies that should be disrupted.

But the people…

There’s a shortage or mainframe people, but I don’t think that’s fatal. Mainframes may fade away for a variety of reasons, but lack of technical skills is not one of them. Lack of domain expertise is the real thing that walks out the door as baby boomers retire, but that’s true no matter what tech they’ve built on. Mainframes are the most extreme problem because they are so long-lived and backward-compatible that there can be decades-old code, while newer architectures have probably had a re-write or two in the past 20 years.

Cameron Seay is one of a handful of people who teaches mainframe skills. By his own admission, it’s not a field for people with CS degrees looking for interesting technical challenges. He has always taught at HBCUs and focuses teaching people from non-traditional backgrounds, for whom a stable high-5 to low-6 figure job with a bank or government agency is a major step up. I wrote a while back that the problems mainframes historically solved often required generic problem solving more than detailed tech knowledge. People who can solve business problems can learn COBOL easily enough if there’s incentive.

Cameron’s interview is worth listening to.

Finally, an urban legend

There is an often-repeated myth that IBM CEO Thomas Watson said in 1943 that “I think there is a world market for maybe five computers.” But there is no record of that ever being said. And it’s unlikely he would have said it at that time, since IBM didn’t enter the computer market until a decade later. The legend has persisted over the years despite frequent debunking, and was listed as the worst tech prediction of all time.

The only possible source for this rumor was a shareholder meeting in 1953, when Watson commented about a roadshow they had put on for their first scientific computer. He said that “as a result of our trip, on which we expected to get orders for five machines, we came home with orders for 18.” They would sell 19 of the initial model 701, and over 1000 of the derivative general-purpose model 650 that followed a year later.

So no, Watson didn’t say it. Bill Gates didn’t say that 640k of RAM would be enough for anybody either.